Make3D

Convert your image into 3D model: Research/Technical

Learning 3-D Models from a Single Still Image

|

We consider the problem of estimating detailed 3-d structure from a single still image of an unstructured environment. Our goal is to create 3-d models which are both quantitatively accurate as well as visually pleasing. The algorithm uses a variety of visual cues that humans use for estimating the 3-D aspects of a scene. It starts with breaking the image up into a number of tiny patches. For each patch in the image, we use a Markov Random Field (MRF) to infer a set of "plane parameters" that capture both the 3-d location and 3-d orientation of the patch. The algorithm models both image depth cues as well as the relationships between different parts of the image. Other than assuming that the environment is made up of a number of small planes, our model makes no explicit assumptions about the structure of the scene; this enables the algorithm to generalize well to scenes that were not seen before in the training set. We then apply these ideas to: (a) predict depths from a single image, (b) incorporate both monocular and stereo cues to obtain significantly better depths than is possible using either monocular or stereo alone, (c) produce visually-pleasing 3-d models from an image to get rich experience in 3-d flythroughs created using image-based rendering, (d) create large scale 3-d models given only a small number of images, and (e) drive a remote-controlled car autonomously.

For an informal description, click here. |

| |||||||

Single Image

Single Image Snapshot of predicted 3-D Model

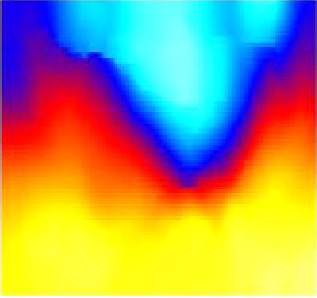

Snapshot of predicted 3-D Model Predicted Depthmap

Predicted Depthmap Mesh-view

Mesh-view